On the Depth of Monotone ReLU Neural Networks and ICNNs

| Authors | Egor Bakaev, Florestan Brunck, Christoph Hertrich, Daniel Reichman, Amir Yehudayoff |

| Journal | Submitted |

| Publication | April 2025 |

| Categories | Neural Networks, Complexity, Polytopes, Geometry |

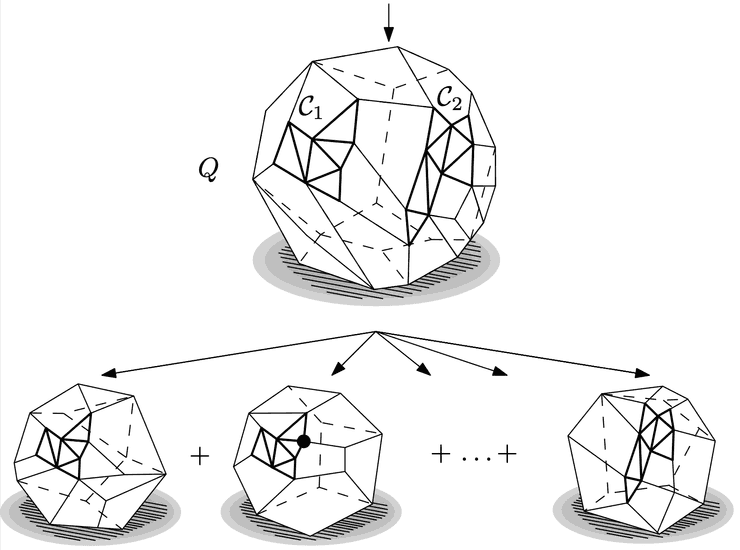

We exploit a beautiful correspondence between ReLU-activated neural networks and polytopes to prove expressivity lower bounds for the depth required in a monotone neural network that exactly computes the maximum of n inputs. We also prove depth separations between ReLU networks and ICNNs, namely for every there exists a depth ReLU network of size that cannot be simulated by a depth- ICNN.
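For context, the sketch below shows one standard way an unrestricted ReLU network can compute the maximum exactly, using the identity max(a, b) = a + ReLU(b - a) in a pairwise tournament. It is not taken from the paper, and the function names `relu`, `max2`, and `max_n` are hypothetical. Note that the identity places a coefficient of -1 on `a` inside the ReLU, which is exactly the kind of negative weight a monotone network is not allowed to use.

```python
def relu(t):
    """ReLU activation: max(t, 0)."""
    return max(t, 0.0)


def max2(a, b):
    """Maximum of two numbers via one ReLU unit.

    Uses max(a, b) = a + relu(b - a). The term (b - a) puts weight -1 on a,
    so this gadget is not monotone in the sense of nonnegative weights.
    """
    return a + relu(b - a)


def max_n(xs):
    """Maximum of n numbers by a pairwise tournament of max2 gadgets.

    Each round roughly halves the number of candidates, so about
    ceil(log2(n)) rounds are needed (an illustrative construction only).
    """
    vals = list(xs)
    if not vals:
        raise ValueError("max_n needs at least one input")
    while len(vals) > 1:
        # Combine neighbours pairwise; an unpaired last element carries over.
        nxt = [max2(vals[i], vals[i + 1]) for i in range(0, len(vals) - 1, 2)]
        if len(vals) % 2 == 1:
            nxt.append(vals[-1])
        vals = nxt
    return vals[0]
```

With integer inputs the arithmetic is exact, so `max_n([3, -1, 7, 2, 5])` agrees with Python's built-in `max`. With floating-point inputs, `a + (b - a)` can differ from `b` by rounding error.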

✧